My AI Journey: Developer Tools & Maximizing Spending Value

Back in 2014, before anyone knew what an AI coding assistant looked like, Kite was already pioneering the space with one of the earliest ML-based code completion tools. Most people have forgotten Kite today, but it was a genuinely revolutionary product that simply arrived a bit too early.

Then came Tabnine in 2018, which took the concept further, supporting multiple languages and offering both free and paid tiers. It was solid but still felt like autocomplete on steroids. You know what autocomplete is, right? You type three letters, and VS Code suggests the rest. Tabnine was smarter, but not fundamentally different.

Fast forward to June 29, 2021. GitHub announced Copilot, and everything changed. This wasn’t just autocomplete. It was context-aware code generation. You’d write a comment, and it would spit out entire functions. I have been using it since its nightly releases, watching it evolve month after month. It genuinely felt like we were one step closer to AI that actually understood context.

On November 30, 2022, ChatGPT dropped. And unlike Copilot, which was built specifically for developers, ChatGPT was for everyone. Within two months, it hit 100 million users. Faster than TikTok. Faster than Instagram. It was the moment AI became impossible to ignore for the general public.

The Explosion

Fast forward to today, and honestly? Those tools feel almost quaint. We’ve come an embarrassingly long way in just a few years.

Now there’s Claude, GPT-5, DeepSeek, Gemini (multiple versions), and a dozen others I’m probably forgetting. For IDEs, you’ve got Cursor, Windsurf, KiloCode, Qwen Coder, and about a thousand variations of VSCode + extensions. Then there are CLI tools like Claude Code, OpenCode, Gemini CLI, and OpenAI Codex, each promising to revolutionize your workflow. And that’s before we even talk about the vibe coding platforms: Lovable, v0, Bolt, Builder AI. The list goes on.

“HONESTLY, THE SELECTION IS SO VAST, YOU START FEELING LIKE YOU NEED EVERYTHING, OR NOTHING! PICK ONE ALREADY!”

— Imani Diot (Angry vibe coder yelling at ChatGPT)

I get it. The FOMO is real. Every tool claims to be faster, smarter and cheaper (spoiler: usually not all three). But here’s what I learnt after months (okay, years) of trial and error.

The Knowledge Problem Nobody Talks About

Here’s something I wish someone had told me earlier: if you don’t know what you’re doing, AI is not the solution.

I’m gonna say it. So pardon my language.

If you are using AI because you don’t know how something works, and you think AI can figure it out for you, please don’t. That’s literally the blind leading the blind off a cliff. You’re a moron asking a hallucinating robot to solve problems you don’t understand. It’s not collaboration; it’s mutual incompetence. Two dumbasses in a room confidently spouting bullshit at each other and somehow expecting that to magically work out. Spoiler alert: it won’t. You’re both just gonna crash and burn together, and honestly, you deserve it.

When you use AI for something completely outside your wheelhouse, you spend 70% of your time correcting the AI. “No, not that. Like this.” Then it goes in a weird direction. Back to square one. It’s exhausting, and your token usage balloons while you’re teaching it.

But when you’re using AI for something you already understand? You can guide it at once. You know what’s wrong. You know what you need. One prompt, maybe two tweaks, and you’re done. The experience is completely different.

If you don’t know something, turn off the AI. Learn it. It may take hours, days, heck, even weeks. But learn it. That way, when the AI hits a wall, you are there to rescue it.

The problem is, most people recommend tools without acknowledging this reality. They show you a polished demo. They don’t show you the 20 failed attempts before the one that worked.

The Consistency Issue (And Why It Matters)

Another thing that’s borderline taboo to mention: LLMs are not deterministic.

Even in 2025, even with the “latest and greatest” models, you can ask the same question to the same LLM at the same time and get completely different outputs. The models are constantly being tweaked, optimized and sometimes degraded. Anthropic’s been pushing updates. OpenAI has been tinkering. Gemini had multiple versions that got retired mid-year. DeepSeek is catching up fast.
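To make the non-determinism concrete, here is a toy sketch of one common source of it: the model samples its next token from a probability distribution instead of always taking the top choice. The token names and scores are made up for illustration, and `sample_next_token` is a hypothetical helper, not any vendor's actual API. Hosted models can vary for other reasons too.

```python
import math
import random


def sample_next_token(logits, temperature=1.0, rng=random):
    """Pick one token by sampling from a temperature-scaled softmax."""
    scaled = [score / temperature for score in logits.values()]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(list(logits), weights=weights, k=1)[0]


# Hypothetical scores a model might assign to candidate next tokens.
logits = {"return": 2.0, "print": 1.5, "raise": 0.5}

# Same "prompt", ten runs: the chosen token can differ from run to run.
print([sample_next_token(logits) for _ in range(10)])
```

Lowering `temperature` toward zero makes the top-scoring token win almost every time, which is why some tools expose that knob. It narrows the variation but does not make a hosted model fully reproducible.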

You can’t rely on AI completely. You have to stay involved, stay sharp and stay skeptical. That sounds negative, but it’s just reality, and it’s actually empowering once you accept it.

The Cost Tsunami

Okay, let’s talk money. Because this is where things get real.

ChatGPT Plus is $20/month. Claude Pro is $20/month. Cursor’s Pro plan is $20/month, but heavy users say it burns through credits way faster than advertised. GitHub Copilot is $10/month and v0 is $20/month. (Honestly, at this point I’m convinced these guys have a kink for the number 20. Except Microsoft.)

If you are a student or a career dev at a reputable company, there’s a good chance you can access the above at a cheaper price or even for free. Please check with your organization to see if you are eligible.

Here’s the sticker shock:

- Just ChatGPT Plus: $20/month

- Add Claude Pro: another $20/month

- Add Cursor Pro: another $20/month

- Add v0: another $20/month

We’re already at $80/month just for chat interfaces and IDEs. And we haven’t even talked about CLI tools or specialized stuff yet.

In my case, if we convert it to Sri Lankan rupees? That’s around 24,000 LKR per month. Just for the basics.
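Here is the sticker-shock arithmetic as a quick sketch. The prices are the ones quoted in this post. The rate of 300 LKR per USD is an assumption, backed out of the 24,000 LKR figure above, so plug in the current rate before trusting the output.

```python
# Monthly prices in USD, as quoted in this post (October 2025).
subscriptions = {
    "ChatGPT Plus": 20,
    "Claude Pro": 20,
    "Cursor Pro": 20,
    "v0": 20,
}

# Assumed exchange rate, implied by 24,000 LKR / 80 USD. Check the live rate.
LKR_PER_USD = 300

total_usd = sum(subscriptions.values())
total_lkr = total_usd * LKR_PER_USD

print(f"Total: ${total_usd}/month, roughly {total_lkr:,} LKR/month")
```

Swap in your own currency and subscriptions to see what your version of "just the basics" costs.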

Important Note: Pricing as of October 21, 2025. These rates are current today but may change with future model releases and vendor updates.

Building My Stack (The Cheap Way)

After a lot of trial and error (and honestly, a lot of wasted money), I figured out a system that doesn’t break the bank. Also please note: you might not need all of it. Depending on your workflow, your needs, and what your organization provides, your stack could look completely different. This is not a step-by-step guide to follow; it’s meant to give you an idea of what I learnt from my experience.

IDE Setup: The Foundation

I use Neovim as my primary IDE with GitHub Copilot set up as my LSP. It’s not for everyone, and that’s okay. Some people work best in a traditional editor environment. If that’s you, don’t force yourself to use Vim just because I do.

When I’m doing agentic work—where I need a smarter, more integrated experience—I switch to VSCode + GitHub Copilot.

Here’s something important: if you get Copilot through your school or company, check what’s actually available. I’ve seen organizations where the latest model versions just… aren’t there. Copilot delivers most of the newest frontier LLMs as soon as they drop for individual paid subscribers, but managed versions are often on older iterations. It’s worth asking your IT team about this.

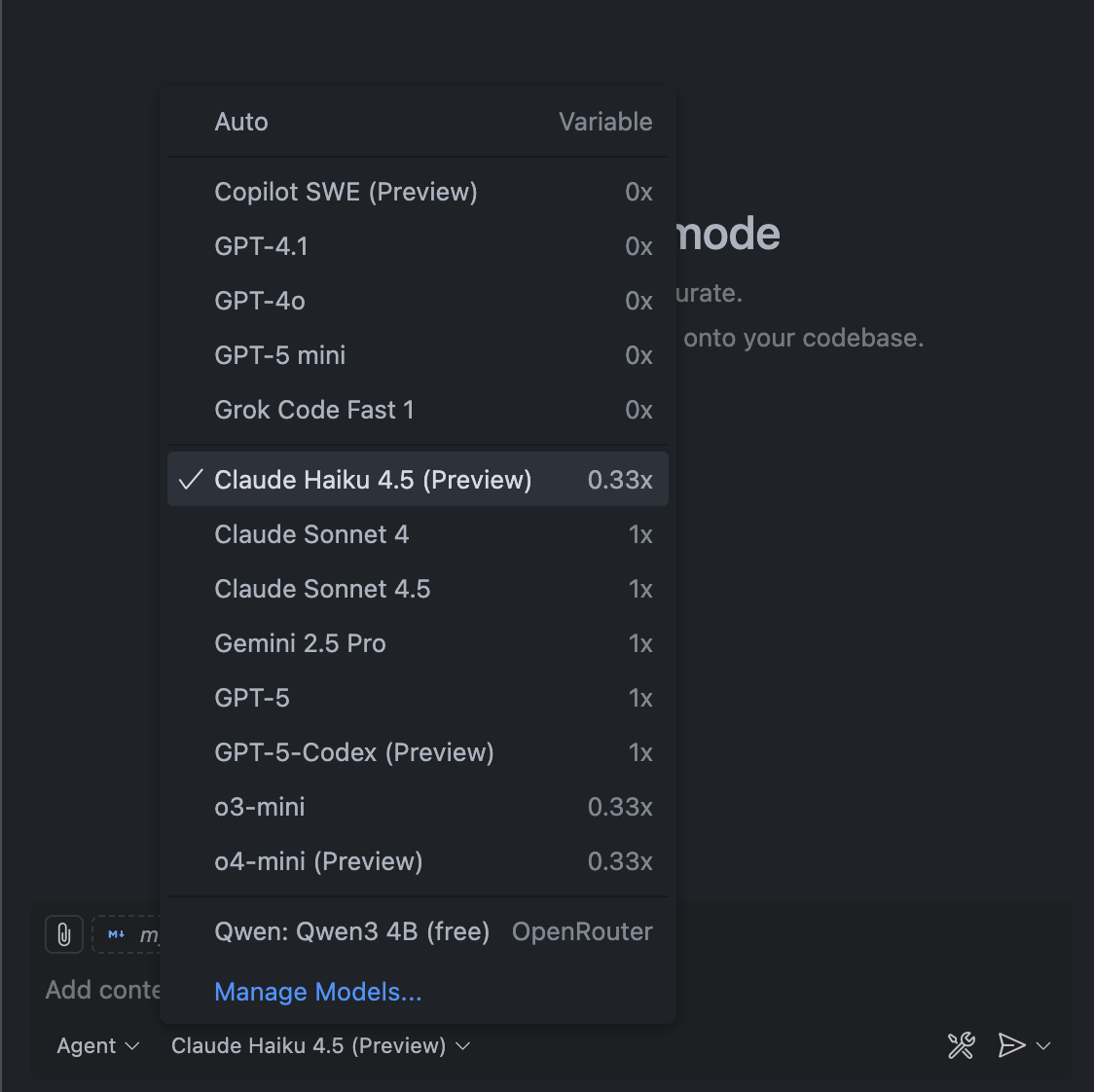

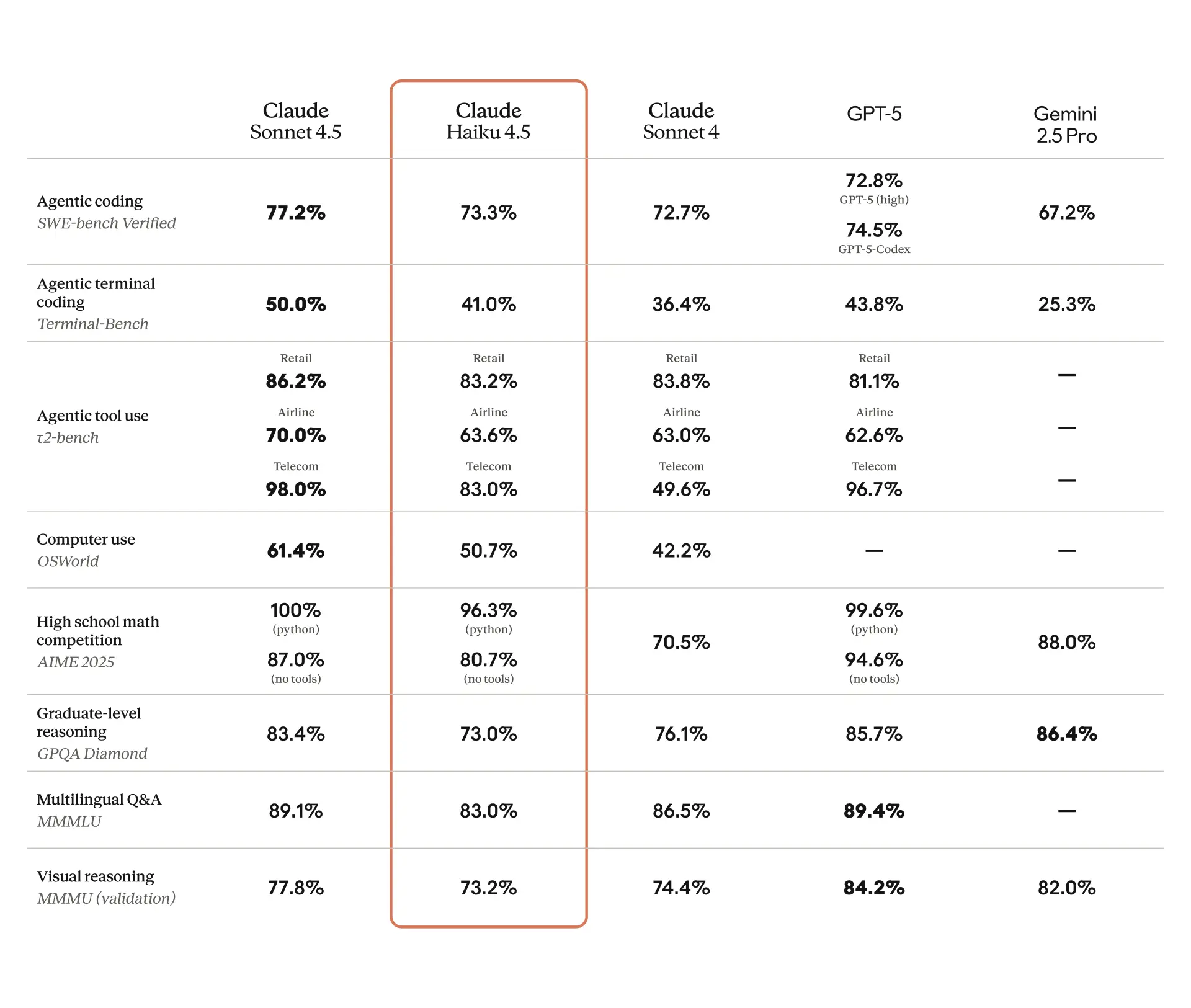

In my Copilot subscription, the model I use 99% of the time now is Claude Haiku 4.5. With a premium request multiplier of just 0.33 (each prompt counts as a third of a premium request), it’s absurdly cost-effective. And here’s the kicker: it’s almost always better than Claude Sonnet 4 and only slightly falls short of Claude Sonnet 4.5 for most tasks. You can read about Haiku 4.5 in detail in Anthropic’s official release announcement.
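A rough sketch of what that 0.33 figure means in practice, assuming it scales how much of a monthly premium-request allowance each prompt consumes. The allowance of 300 and the 1.0 baseline are assumptions for illustration only, so check your own plan for the real numbers.

```python
def prompts_per_month(allowance, multiplier):
    """How many prompts fit in a monthly premium-request allowance."""
    return int(allowance / multiplier)


ALLOWANCE = 300  # assumed monthly premium requests; check your own plan

haiku_prompts = prompts_per_month(ALLOWANCE, 0.33)    # 0.33 is from this post
baseline_prompts = prompts_per_month(ALLOWANCE, 1.0)  # assumed baseline model

print(f"Haiku 4.5: {haiku_prompts} prompts, baseline: {baseline_prompts} prompts")
```

Under those assumptions, the same allowance stretches to roughly three times as many prompts, which is the whole appeal.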

Here’s the Anthropic benchmark on Haiku 4.5

For UI generation, though? GPT-5 or GPT-5-Codex all the way. OpenAI’s models are miles ahead on UI work compared to literally everyone else’s state-of-the-art frontier models. It’s not even close.

Cost so far:

- IDE: Free (both Neovim and VSCode are free)

- GitHub Copilot: $10/month

Chat Apps: The Aggregator Play

You’re probably already using ChatGPT free, and that’s fine. But if you’re paying $20/month or constantly hitting the free tier limits, there’s a better option.

Enter T3 Chat.

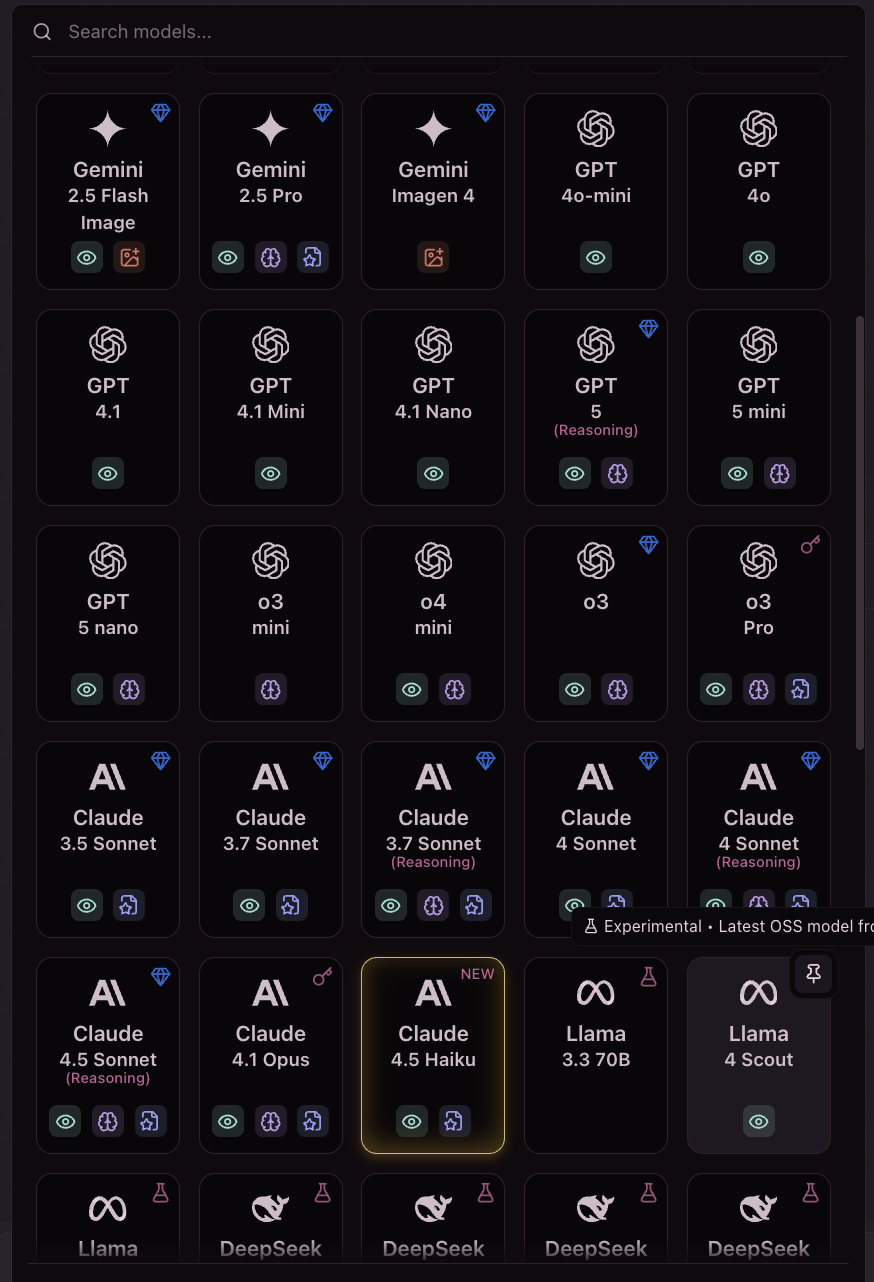

I’m not exaggerating when I say this changed my workflow. T3 Chat is an AI aggregator. You get access to basically all the models on the internet (GPT-5, Claude, DeepSeek, Gemini, etc.) in a single interface. It’s the fastest chat app I’ve ever used. And I mean that literally. If you’re using GPT models inside T3 Chat, it’s faster than using OpenAI’s own website.

For $8/month, you get:

- Access to all major models

- 1,500 regular requests per month (which is honestly generous—I’m a heavy user and rarely hit this)

- 100 premium requests for expensive models like Claude or image generation

- It’s just… fast

There are discount codes floating around too. The first month often costs just $1.

Cost: $8/month

CLI Tools: The Power Move

If you work in the terminal like I do, CLI tools just feel natural. You don’t need them, but if you do use the CLI for your dev work, they’re incredibly efficient.

I recommend two main tools: Claude Code and OpenCode. Both are solid options. You genuinely can’t go wrong with either.

Now, you might be thinking: “Why would I use Claude Code? Claude plans are so expensive. And to use OpenCode, I have to purchase APIs from other providers anyway.” Fair point. But here’s the thing. OpenCode Zen is a bit generous because they’re providing Grok Code Fast 1 and Code Supernova models for free (at least for now). That changes the equation significantly. You get powerful, capable models without the API cost overhead.

But here’s where it gets interesting. Z.AI.

From my experience, Z.AI’s GLM models are just as powerful as Claude models for a fraction of the cost. If you buy their GLM Coding plan, you can use it with either Claude Code or OpenCode. They’ve got detailed setup guides at z.ai/devpack/tool/claude.

I bought their Lite plan. It’s the cheapest option, and honestly, it’s more than enough 99% of the time because it’s very unlikely you’ll hit those limits (it still provides 3x more usage than Claude’s $20/month plan).

Here’s the pricing breakdown: $3/month for the first month, then $6/month, or you can grab the quarterly plan for $9 for the first three months, then $18 for every three months after that.

Cost: $3/month (first month), $6/month after, or $9/quarter (then $18/quarter)
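If you’re torn between monthly and quarterly billing, here is a small sketch comparing the first-year cost of each. It assumes the intro price applies exactly once and ignores taxes or fees. The function names are just for illustration.

```python
def first_year_monthly(intro=3, regular=6):
    """One intro month, then eleven months at the regular price."""
    return intro + 11 * regular


def first_year_quarterly(intro=9, regular=18):
    """One intro quarter, then three quarters at the regular price."""
    return intro + 3 * regular


monthly_total = first_year_monthly()
quarterly_total = first_year_quarterly()

print(f"Monthly billing:   ${monthly_total} for the first year")
print(f"Quarterly billing: ${quarterly_total} for the first year")
```

The regular rate is the same either way ($18 per quarter is $6 per month), so the only difference is how long the intro discount lasts.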

UI Generation: Pick Your Vibe

For UI stuff, any of the vibe coding platforms work fine for demos or mockups. But v0 is genuinely generous with its free plan, and the output is actually usable. That matters.

Cost: Free (with generous limits)

The Final Monthly Tally

Here’s what my actual spending looks like:

- GitHub Copilot: $10/month

- T3 Chat: $8/month

- GLM Coding Lite: $3/month (first month), $6/month after

Total: $21/month (or $24/month after the first month)

That’s literally the cost of one ChatGPT Plus subscription. For that same $20-ish, I have:

- A fully agentic working IDE (Neovim/VSCode + Copilot)

- Full access to every major AI model through T3 Chat

- A CLI tool as powerful as Claude Code with Claude models

- Free UI generation tools

Compare that to what you’d pay if you subscribed to everything individually:

- ChatGPT Plus: $20

- Claude Pro: $20

- Cursor Pro: $20

- Gemini Advanced: $20 (or $19.99 technically)

We’re talking $80 versus $21. That’s a saving of roughly 74% in the first month, and about 70% once the GLM plan moves to $6/month.
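Here is the same comparison as arithmetic, using only the prices listed in this post. It’s a sketch for checking the numbers yourself, so swap in your own subscriptions.

```python
# Monthly prices in USD, as listed in this post (October 2025).
my_stack = {"GitHub Copilot": 10, "T3 Chat": 8, "GLM Coding Lite": 3}
everything = {
    "ChatGPT Plus": 20,
    "Claude Pro": 20,
    "Cursor Pro": 20,
    "Gemini Advanced": 20,
}


def saving_percent(cheap, expensive):
    """Percentage saved by paying `cheap` instead of `expensive`."""
    return (expensive - cheap) / expensive * 100


first_month = sum(my_stack.values())
later_months = first_month - 3 + 6  # GLM Lite moves from $3 to $6
baseline = sum(everything.values())

for label, cost in [("First month", first_month), ("Later months", later_months)]:
    print(f"{label}: ${cost} vs ${baseline}, saving {saving_percent(cost, baseline):.1f}%")
```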

Important Note: These prices are as of October 21, 2025, and may change with future model releases. Always verify current pricing on official websites before making decisions. Also, these are just my recommendations based on my specific workflow—find what works best for yours.

The Reality Check

Let me be clear about something: you probably don’t need to spend anything.

If you can get by on free plans, you should. Most of the time, free tiers are actually pretty generous. It’s only when you’re a moderate to heavy user like me that paying makes sense.

Also, not everyone works the way I do. You might not use a CLI. So CLI-based AI tools might not make any sense to you at all. You might prefer a different IDE entirely. You might not need agentic coding at all. And that’s completely fine. Don’t force yourself to replicate my stack just because it works for me.

The lesson here is: experiment, track your actual usage, and build a stack around your real needs—not marketing hype.

Image Generation: The Gemini Surprise

Oh, and one more thing I almost forgot: Gemini’s Nano Banana image generation.

Honestly, image generation from Gemini is one of those weird bright spots. Nano Banana (the nickname for Gemini 2.5 Flash Image) is surprisingly good for free image generation, and it’s a legitimate tool I use regularly. Everything else about Gemini feels like they’re way behind (and let’s be real, they seriously need Gemini 3.0 because right now their text models are significantly behind the competition). But the image stuff? That’s their strength.

The Closing Thought

Building an AI stack in 2025 isn’t about having the most tools. It’s about having the right tools, used well, without bleeding money you don’t have to spend.

Start with free. Get good at one thing. Then add paid tools only when they genuinely solve a problem you’re facing. And remember: the tool isn’t the bottleneck. You are. Knowledge, judgment and knowing what you’re doing matter way more than the fanciest LLM.

Because here’s the uncomfortable truth: an expensive AI using a mediocre prompt will usually lose to a cheap AI with a brilliant prompt. And a brilliant prompt requires you to actually know what you’re doing.

So maybe start there.